Warning: This article does NOT contain a TL;DR version. Everything in this article is 100% relevant, informative and educational. You're here to stay, whether you like it or not. If you attempt to close this tab without reading everything, your mouse might catch fire and your desk will burn. Or not. It all depends on the chance of that actually happening. You were warned.

Off topic

Time flies. Seems like only yesterday I wrote my last post.

Reads date stamp: 20 September 2021…

Well, it does, and a lot has happened since then.

I’ve learned a lot of new things, I’ve met a bunch of interesting people, I’ve experimented with a lot of technologies and on a more personal level, my kid is growing like a champ. And us with him.

Ain’t life wonderful?

One of the first things I did last year, was to get into Ansible. I had experimented a bit with it in the past, but not much.

I’m not an expert, by any means, but I know my way around it pretty well.

I also got ok-ish at basic kubernetes stuff too. I know enough to get myself into trouble, but also get myself out of trouble. Those who know me, know what I mean. Wink!

They don’t. All of my friends have no understanding of this… alien language.

I managed to deploy Zabbix in HA mode and although it had some performance issues at first, with a database growing too large and items queueing like crazy, I sorted that too. Almost.

Oh, this was part of a migration from an older version of Zabbix to the newest.

It was all quite new to me but it all worked fine in the end.

All supported by, you guessed it; Ansible!

You didn’t guess it, did you?

I actually want to write two whole articles about that, as it was something so simple, yet so elusive. Not the migration. That was easy.

The database size and queueing stuff. That was stupid to happen in the first place and mainly due to poor documentation.

That was off-topic. Now back to it.

On Topic

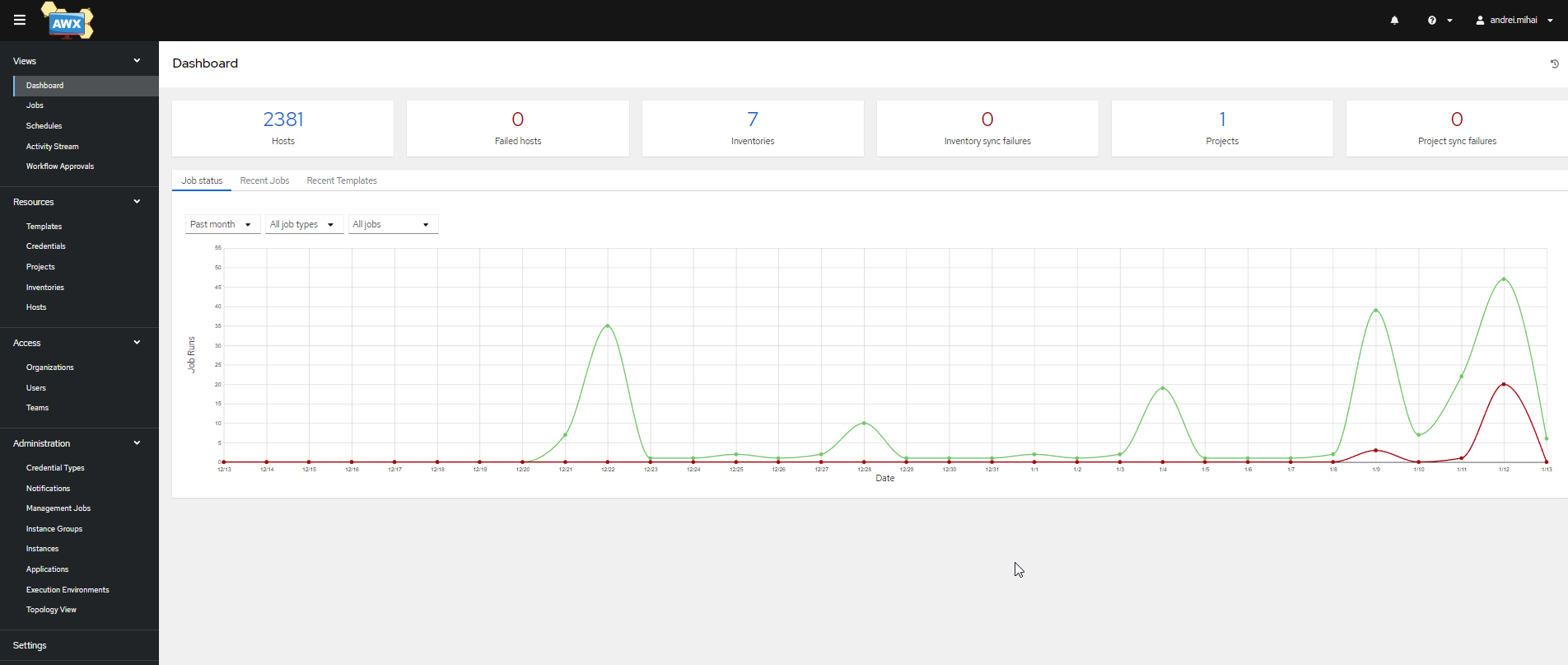

The Red Hat Ansible Automation Platform is based on AWX.

In simple terms, Ansible AWX is a platform that allows you to run Ansible playbooks through a nice web-ui.

It does a lot more than that, in fact. That is something we’ll probably explore in the future, but today I want to show you how to deploy Ansible AWX on a kubernetes cluster in a few easy steps, and share some of the facts that come with this type of deployment.

I will try to split this into blocks so that it is easier to parse and understand.

I hope that by the end of the article you’ll have a working AWX instance.

Laughs maniacally

AWX can be installed on any modern Linux distribution that supports kubernetes. You can use a VM, WSL2 with systemd (tricky to use, but it works, if you are absolutely out of your mind), or a bare-metal server.

Choosing your environment

Now, to start with the serious stuff.

You can run it on 2 CPUs and 4 GB of RAM easily, although you might see some performance hits from time to time.

On such systems, I highly recommend dedicating the system only to AWX.

We will build AWX with an external database. For this project we will use PostgreSQL 14.

If possible, I recommend using a second server for the database. If not, local works too.

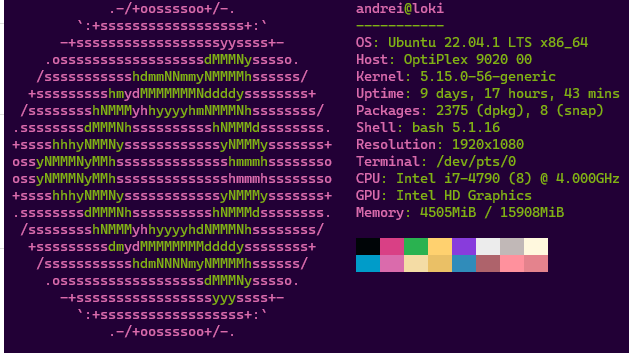

My own home instance tends to be a bit sluggish on the following system, although this is not a dedicated server for the AWX services.

For this article, we will use Ubuntu 22.04, however, I have successfully used Fedora 35-36, Oracle Linux 8.5 and Rocky Linux 9 to host AWX.

For those that want to skip reading this article, you can find my own repo with full instructions here.

Note that kubeDeploy.sh works on Ubuntu while kubeDeploy_worker.sh works on RHEL/Fedora based systems. That particular script was written on Fedora 35 and was tested on Fedora 35, 36 and 37, Alma Linux 8 and 9, Rocky Linux 8 and 9 as well as Oracle Linux 8.

The k8s groundwork

The most basic AWX installation requires a single node Kubernetes cluster. That one node runs what Kubernetes nomenclature calls the Control Plane.

A single node Kubernetes cluster does not require much in terms of resources.

I made things very easy to use and understand at the same time, when I created the kubeDeploy.sh script. The script works as is. No need to make any changes.

sudo firewall-cmd --permanent --zone=trusted --add-port=6443/tcp --add-port=2379-2380/tcp

sudo firewall-cmd --permanent --zone=trusted --add-port=10251/tcp

sudo firewall-cmd --permanent --zone=trusted --add-port=10252/tcp

sudo firewall-cmd --permanent --zone=trusted --add-port=10250/tcp

sudo firewall-cmd --permanent --zone=trusted --add-port=30000-32767/tcp

sudo firewall-cmd --permanent --zone=trusted --add-port=80/tcp

sudo firewall-cmd --permanent --zone=trusted --add-port=443/tcp

sudo firewall-cmd --permanent --zone=trusted --add-port=22/tcp

sudo firewall-cmd --reload

sudo firewall-cmd --list-all

sudo modprobe overlay

sudo modprobe br_netfilter

sudo cat << EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

sudo cat << EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

sudo sysctl --system

sudo apt update

sudo apt install containerd

sudo mkdir -p /etc/containerd

sudo containerd config default | sudo tee /etc/containerd/config.toml

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

sudo systemctl restart containerd

sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

sudo echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update

#apt-cache policy kubelet | head -n 20

#VERSION=$(apt-cache policy kubelet | head -n 7 | tail -n 1 | cut -d' ' -f 6)

#apt install -y kubelet=$VERSION kubeadm=$VERSION kubectl=$VERSION

sudo apt install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl containerd

sudo systemctl enable --now containerd kubelet

sudo kubeadm init --ignore-preflight-errors=all

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

kubectl get nodes

kubectl get pods -A

Perhaps uncomment the commented lines to install the previous to last version of each package and exclude them from being updated. This is fairly important, so it’s fitting I would place this instruction here.
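To make "previous to last version" a bit more concrete, here is a minimal sketch of how that pick could be done. The function name is made up for illustration, and it assumes GNU sort (for version sorting) is available. If only one version is offered, that one is returned.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of kubeDeploy.sh.
# Reads package versions (one per line) on stdin and prints the
# second-newest one. Relies on GNU sort's -V (version sort).
previous_to_last_version() {
    sort -V -u | tail -n 2 | head -n 1
}

# On an Ubuntu host the list could come from apt, for example:
#   VERSION=$(apt-cache madison kubelet \
#       | awk -F'|' '{gsub(/ /, "", $2); print $2}' \
#       | previous_to_last_version)
#   sudo apt install -y kubelet="$VERSION" kubeadm="$VERSION" kubectl="$VERSION"
#   sudo apt-mark hold kubelet kubeadm kubectl
```

Pinning one explicit version this way keeps kubelet, kubeadm and kubectl in step with each other, which the plain install line does not guarantee.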

If you’re already using Ansible, you might also want to consider using my very own playbook, although some minor tweaking is required, as it’s rather personalized.

As per the official docs, 2GB RAM/2 CPU are enough for a basic cluster. More never hurts, except maybe your wallet.

Some other things you need to take into account when creating a kubernetes cluster, that you may miss if not specified.

- Disable swap. This gave me plenty of headaches at one point. Looking at you, zram.

- Disable the host firewall OR add every rule required. With a firewall disabled, for a non-public interface, you don’t need to worry about adding rules every time. On a public interface, though, things change.

- Be mindful of SELinux. See my other article.

man and journalctl are your friends.

The playbook handles swap and makes sure the firewall is disabled. The script does the opposite.

Feel free to add your own syntax to the script to achieve the task.
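If you want the script to deal with swap as well, something along these lines might do. It is only a sketch: the function names are my own, and it assumes swap is declared in /etc/fstab. Swap that is set up elsewhere (zram, for instance) is not covered.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of kubeDeploy.sh.

# Succeeds if the given swaps table (default: /proc/swaps) lists an active
# device. The first line is a header, so anything after it means swap is on.
swap_is_active() {
    local table="${1:-/proc/swaps}"
    [ "$(tail -n +2 "$table" | grep -c .)" -gt 0 ]
}

# Comments out every uncommented swap entry in an fstab-style file,
# keeping a .bak copy next to it.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Typical use on a real host, as root:
#   swapoff -a            # turn swap off for the running system
#   comment_out_swap      # keep it off after the next reboot
#   swap_is_active || echo "swap is off"
```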

I won’t sue you.

As I use the playbook(s) for this task, I could not be bothered with tweaking the script.

The script(s) are for non-ansible people and are more of an orientative, educational way of automating k8s deployments.

The quick and easy way is to just run the script.

Remember, that the script was made for Ubuntu, so if you’re on SUSE or RHEL, you’ll need to adjust the syntax.

Most of the steps for RHEL systems can be found in the kubeDeploy_worker.sh script.

For other distributions like Manjaro, find your own way.

modprobe overlay

modprobe br_netfilter

cat << EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

cat << EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

sudo sysctl --system

sudo yum update

sudo yum install containerd

mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

systemctl restart containerd

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

yum update

#VERSION=$(yum list --showduplicates kubectl --disableexcludes=kubernetes | tail -n 2 | head -n 1 | cut -d' ' -f24)

yum install -y kubelet kubeadm kubectl

systemctl enable --now containerd kubelet

The commented line in this script has a purpose. Can you figure out what it is?

Hint: control plane and workers should be on the same version.
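Once the nodes are up, a quick way to see whether they really are on the same version could look like the sketch below. The helper name is hypothetical; the kubectl query in the comments reads the kubelet version that each node reports.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if every argument is the same string.
all_same_version() {
    [ "$#" -gt 0 ] || return 1
    local first="$1" v
    for v in "$@"; do
        [ "$v" = "$first" ] || return 1
    done
}

# On a running cluster (left unquoted on purpose so each version is one word):
#   versions=$(kubectl get nodes -o jsonpath='{.items[*].status.nodeInfo.kubeletVersion}')
#   if all_same_version $versions; then echo "nodes match"; else echo "version drift: $versions"; fi
```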

A fitting place for another important bit.

So provided you went ahead and just ran the scripts, you more than likely ran into some issues. If you didn’t then either you know what you’re doing or you followed someone else’s instructions.

If neither, then you’re in luck, my friend. You should play the lottery.

Try not to mess this up

When it comes to databases, I’m a sucker. I know a few queries here and there, I understand the principles and I try to do my best, or at least I’m good at googling.

Heck, last week I partitioned a live 300+GB mysql database with no disruptions. In my sleep. No, I didn’t mean I dreamt it. I just left the process running overnight.

I did dream I was The Joker, one night though. Weird.

So the point is to install a supported version of postgresql.

Aligning with the official docs, postgresql 12 is a minimum, although my script installs postgres 14.

I don’t have the script in my own repository, for some unknown reason, but I am willing to share it here, for now.

This is for RHEL based systems, so you’ll need to adjust for Ubuntu. There are some commented lines that will also help you upgrade postgresql if you’re running on an older version.

if [ -z "$1" ]

then

echo -e "Please run this script as follows:\n ./pg14.sh yoursupersecretpassword (optional)your_database_file.sql"

#update

else

sudo dnf update -y

#install deps

sudo dnf -y install gnupg2 wget vim tar zlib openssl

#get repo

sudo dnf install https://download.postgresql.org/pub/repos/yum/reporpms/EL-9-x86_64/pgdg-redhat-repo-latest.noarch.rpm -y

#disable old pg

sudo dnf -qy module disable postgresql

#install new pg

sudo dnf install postgresql14 postgresql14-server postgresql14-contrib -y

#init new pg

sudo /usr/pgsql-14/bin/postgresql-14-setup initdb

#enable the service.

sudo systemctl enable --now postgresql-14

# note that the default location for all files has changed from

# /var/lib/postgresql/data/pgdata/ to /var/lib/pgsql/14/data

# if you're on a distribution that's not fedora or opensuse downstream, then...perhaps it's in /etc/.

# luckily, I have your back.

# we need to do a few things, before we make the database available to k8.

# first, we need to change local connection controls. This allows us to run psql commands locally.

sudo sed -i.bak 's/^local.\{1,\}all.\{1,\}all.\{1,\}peer/local all all trust/' /var/lib/pgsql/14/data/pg_hba.conf && sudo grep 'trust$' /var/lib/pgsql/14/data/pg_hba.conf

#then we need to allow remote connection controls, for the newly created database. This allows us to run psql commands remotely. pods and tasks need this.

#DO NOT DO THIS ON A PUBLICLY FACING SERVER

sudo bash -c "echo host awx awx 0.0.0.0/0 md5 >> /var/lib/pgsql/14/data/pg_hba.conf" && sudo grep awx /var/lib/pgsql/14/data/pg_hba.conf

#then we need to update postgresql.conf to listen for external connections. This is so we can connect to the DB, from our pods.

sudo sed -i.bak "s/^#listen_addresses = 'localhost'/listen_addresses = '*'/" /var/lib/pgsql/14/data/postgresql.conf && sudo grep listen /var/lib/pgsql/14/data/postgresql.conf

#restart to apply changes

sudo systemctl restart postgresql-14.service

#create a database.

psql -U postgres -c "create database awx;"

#create the role.

psql -U postgres -c "create role awx with superuser login password '$1';"

#test the connection.

psql -U awx awx -h localhost -c "\c awx;\q;"

#You are now connected to database "awx" as user "awx".

#^means success.

echo "We are done. We can proceed with the K8s setup now."

#if we have a database that we need to restore, then we need to perform a few more steps:

#1. restore the DB

#

if [ -n "$2" ]

then

psql -U awx awx < "$2"

#

#2. Done.

fi

fi

#NOTE: When restoring a database, the next AWX deployment will likely also upgrade the database, so the deployment can take a while.

#NOTE2: Don't forget to claim your secret-key secret from the old AWX instance. You'll need this for the AWX deployment to connect with your restored database.

Just read through the comments if you don’t know what the script does. Spoon-fed…

I hope you made that work for yourself. If not, tough luck. Did I not tell you that this is the road to perdition? Must’ve forgot…

Many learn by doing, so this is your chance. If you already did and already know, then great. You’re a star!
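The script opens the awx database to 0.0.0.0/0, which is only acceptable on a trusted LAN. A narrower variant is sketched below: it adds a rule for a single network and skips the write if the line is already there. The function name and the 192.168.1.0/24 range are placeholders of mine, so swap in whatever network your k8s node connects from.

```shell
#!/usr/bin/env bash
# Hypothetical helper: appends a pg_hba.conf rule that lets role "awx" reach
# database "awx" from one CIDR, unless that exact line already exists.
allow_awx_from() {
    local cidr="$1"
    local hba="${2:-/var/lib/pgsql/14/data/pg_hba.conf}"
    local rule="host awx awx ${cidr} md5"
    grep -qxF "$rule" "$hba" || echo "$rule" >> "$hba"
}

# Example, run as a user that can write the file:
#   allow_awx_from 192.168.1.0/24
#   systemctl restart postgresql-14.service
```

Because the check is for the exact line, running it twice leaves a single entry in the file.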

Once this step is complete, you have a K8s cluster running and a database ready for your AWX service. With these two requirements complete you’re half-way to 30% there.

What comes next is the interesting part. And obviously I have a script for that too, but dessert always comes after the main course, so we’ll have to dig in first.

Manifests time!

This is the pure kubernetes part that most despise. I did too, but then I learned to love it. A sort of Stockholm syndrome, if you will.

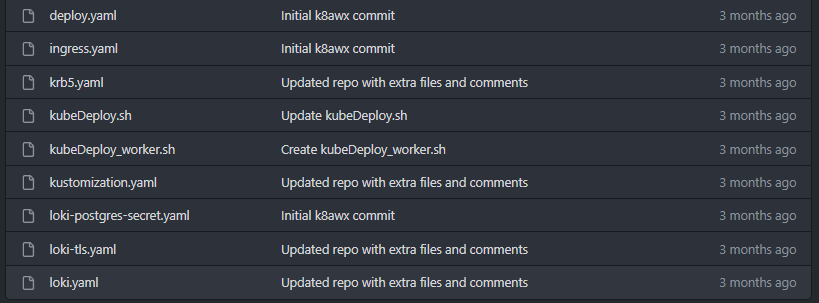

From a bird’s eye view, this is what you need:

Let me explain.

Ignoring the .sh scripts, the following files do the following things:

- loki.yaml – this is the equivalent of awx-demo.yaml in the official documentation.

It defines your type of instance, the HTTP port it will be accessible on (use 30080, trust me), type of ingress, hostname, etc. This file is responsible for the deployment of your AWX service. I suggest reading the docs to better understand what each specification does.

I named it loki, because that is what the server name is.

- loki-tls.yaml – This is your TLS secret. You will need one if you want to access the service over port 443.

Go here to learn how. You can use a self-signed cert.

Note that the name of the secret needs to be specified in the main file, as the ingress_tls_secret.

- loki-postgres-secret.yaml – It contains the connection details for your database, which you created in the previous step.

If the pg14 script did its job, you’ll only need to specify the host and the secret in this file.

Note that the name of the secret needs to be specified in the main file as the postgres_configuration_secret.

- ingress.yaml – It creates an ingress point which allows you to access your new AWX site over port 443, instead of the standard nodePort you have selected in the main manifest. More on that later. As in never.

- krb5.yaml – It creates a kerberos configuration and attaches that to your awx instance as a volume mount, so that you can manage domain joined Windows hosts. Tweaking is required as I have used an example configuration.

I recommend that you copy the /etc/krb5.conf contents from one of your domain-joined linux hosts and paste it under the krb5.conf: | section.

You don’t need this if you’re managing non-domain joined devices, or only Linux hosts in a LAN. So for your home-lab, you can remove this file or ignore it completely.

By the way, if you want this configuration to work, you’ll probably need to use my own Execution Environment.

- deploy.yaml – Is this. It creates an ingress-controller.

You’ll need this for https access.

- kustomization.yaml is the manifest file that lists all the files needed for the deployment. We use kustomize for this task. Kustomize is installed by the awxSetup.sh script.
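For a rough idea of what two of those files might contain, here is a sketch that generates them. The field names follow the awx-operator documentation as far as I know it; the function name, the secret name loki-postgres-configuration and every value are placeholders, so don't mistake this for the contents of my repo.

```shell
#!/usr/bin/env bash
# Hypothetical generator for loki-postgres-secret.yaml and loki.yaml.
# Arguments: target directory, database host, database password, AWX hostname.
write_awx_manifests() {
    local dir="$1" db_host="$2" db_password="$3" hostname="$4"

    # Connection details for the external PostgreSQL instance.
    cat > "$dir/loki-postgres-secret.yaml" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: loki-postgres-configuration
  namespace: awx
stringData:
  host: ${db_host}
  port: "5432"
  database: awx
  username: awx
  password: "${db_password}"
  sslmode: prefer
  type: unmanaged
type: Opaque
EOF

    # The AWX instance itself, pointing at the two secrets by name.
    cat > "$dir/loki.yaml" <<EOF
apiVersion: awx.ansible.com/v1beta1
kind: AWX
metadata:
  name: loki
spec:
  service_type: nodeport
  nodeport_port: 30080
  ingress_type: ingress
  hostname: ${hostname}
  ingress_tls_secret: loki-tls
  postgres_configuration_secret: loki-postgres-configuration
EOF
}

# Example:
#   write_awx_manifests . 192.168.1.50 yoursupersecretpassword awx.example.lan
```

A password containing double quotes or backslashes would need escaping before it goes into the YAML, which this sketch does not attempt.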

Now that we’ve described all files, it’s important to make sure we have everything we need setup correctly.

For example, the kustomization.yaml manifest looks like this:

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  # Find the latest tag here: https://github.com/ansible/awx-operator/releases
  - github.com/ansible/awx-operator/config/default?ref=0.29.0
  - loki-postgres-secret.yaml
  #uncomment below if you have updated and want to use the kerberos extra volume/mounts
  # - krb5.yaml
  - loki-tls.yaml
  - loki.yaml

# Set the image tags to match the git version from above
images:
  - name: quay.io/ansible/awx-operator
    newTag: 0.29.0

# Specify a custom namespace in which to install AWX
namespace: awx

Note that the krb5.yaml file is commented out.

When you run the kustomize command, kustomize will apply each manifest in the order they appear in the file.

So if you place loki.yaml before loki-postgres-secret.yaml, when the awx service is created it might not know about the connection details manifested in loki-postgres-secret.yaml and your deployment might fail.

My experience tells me that the deployment restarts and when it does, it re-checks everything and finds the connection details.

Also my experience: – You do know you put those in that order in there for a reason, right? Right?!

The final piece of the puzzle

So, as per my own personal tradition, I spend hours writing a piece of text that really explains nothing, as I truly know nothing and anyone who reads it, just has to click a few links and gets all the good stuff served on a silver platter.

Good ol’ Andrei.

As a bonus, the script can also upgrade your AWX instance. Do make sure to include a pg_dump in there, if you care about your saved stuff, otherwise, just use it as is.

I’m not saying upgrading breaks stuff. It doesn’t. But just in case it might…

This is the bit that always gets you the latest version.

if [ ! -d $HOME/awx/k8awx/awx-operator/ ]

then

git clone https://github.com/ansible/awx-operator.git

else

rm -rf $HOME/awx/k8awx/awx-operator/

git clone https://github.com/ansible/awx-operator.git

fi

cd $HOME/awx/k8awx/awx-operator/

ver=$(git describe --tags --abbrev=0)

cd $HOME/awx/k8awx/

sed -r -i s/\([0-9]\{1,\}.\)\{2\}[0-9]\{1,\}/"$ver"/g kustomization.yaml

Thank me later!
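The sed above rewrites anything in the file that looks like x.y.z. If that ever feels too greedy, a narrower take is sketched here: it only touches the ?ref= part of the operator URL and the newTag value. The function name is hypothetical and the layout of kustomization.yaml is assumed to match the one shown earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helper: points kustomization.yaml at a given awx-operator tag
# by rewriting only the "?ref=" query and the "newTag:" value.
# A .bak copy of the file is kept.
set_operator_tag() {
    local tag="$1" file="${2:-kustomization.yaml}"
    sed -i.bak -E \
        -e "s|(awx-operator/config/default\?ref=)[^[:space:]]+|\1${tag}|" \
        -e "s|(newTag:[[:space:]]*)[^[:space:]]+|\1${tag}|" \
        "$file"
}

# Example, using the tag found by git describe:
#   set_operator_tag "$ver" "$HOME/awx/k8awx/kustomization.yaml"
```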

One last thing. After the ingress.yaml is applied, you will be able to access your site for a short period. Then, you won’t.

I’m not yet sure what causes this. All I know is that awx-operator sees that a change has occurred and restarts the installation, which also modifies your ingress by removing its annotation.

So what you have to do is to wait a few minutes for the deployment to finish, then edit the ingress and re-add the annotation.

You can use kubectl patch or kubectl edit for that.
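For the patch route, a sketch might look like this. The helper that builds the JSON is my own invention; the ingress name loki-ingress and the awx namespace are taken from the output further down, so adjust them if yours differ.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds a JSON merge patch that sets one annotation.
annotation_patch() {
    local key="$1" value="$2"
    printf '{"metadata":{"annotations":{"%s":"%s"}}}' "$key" "$value"
}

# With cluster access, either of these should put the annotation back:
#   kubectl patch ingress loki-ingress -n awx --type merge \
#       -p "$(annotation_patch kubernetes.io/ingress.class nginx)"
#   kubectl annotate ingress loki-ingress -n awx \
#       kubernetes.io/ingress.class=nginx --overwrite
```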

It doesn’t matter which you use. Your ingress should look like this when you run kubectl get ingress -n awx -o yaml

Note the kubernetes.io/ingress.class: nginx annotation.

apiVersion: v1
items:
- apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    annotations:
      kubectl.kubernetes.io/last-applied-configuration: '{"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"labels":{"app.kubernetes.io/component":"awx","app.kubernetes.io/managed-by":"awx-operator","app.kubernetes.io/name":"loki","app.kubernetes.io/operator-version":"1.1.3","app.kubernetes.io/part-of":"loki"},"name":"loki-ingress","namespace":"awx"},"spec":{"rules":[{"host":"ansible.aimcorp.co.uk","http":{"paths":[{"backend":{"service":{"name":"loki-service","port":{"number":80}}},"path":"/","pathType":"Prefix"}]}}],"tls":[{"hosts":["ansible.aimcorp.co.uk"],"secretName":"loki-tls"}]}}'
      kubernetes.io/ingress.class: nginx
    creationTimestamp: "2023-01-11T10:30:20Z"
    generation: 1
    labels:
      app.kubernetes.io/component: awx
      app.kubernetes.io/managed-by: awx-operator
      app.kubernetes.io/name: loki
      app.kubernetes.io/operator-version: 1.1.3
      app.kubernetes.io/part-of: loki
    name: loki-ingress
    namespace: awx
    ownerReferences:
    - apiVersion: awx.ansible.com/v1beta1
      kind: AWX
      name: loki
      uid: f3308bb7-5ab1-42af-a602-d885be4841d9
    resourceVersion: "2471"
    uid: 3f00f83b-7368-4daf-8ec2-31e2ff0c0a95
  spec:
    rules:
    - host: ansible.aimcorp.co.uk
      http:
        paths:
        - backend:
            service:
              name: loki-service
              port:
                number: 80
          path: /
          pathType: Prefix
    tls:
    - hosts:
      - ansible.aimcorp.co.uk
      secretName: loki-tls
  status:
    loadBalancer:
      ingress:
      - ip: 192.168.1.100
kind: List
metadata:
  resourceVersion: ""

Accessing AWX

The main access details are here. Use those to get your initial secret, then change the password.

Or you can just copy/paste this command. That should work.

kubectl get secret -n awx $(kubectl get secret -n awx | grep "admin-password" | awk '{print $1}') -o jsonpath="{.data.password}" | base64 --decode ; echo

If you have any questions, get in touch.

Thank you for reading.